Mega-Prompts: Turning Expertise into Code

What are mega-prompts, why technical and non-technical folks are focusing on them, and how mega-prompts make it clear prompts will evolve to become the atomic unit of work.

I am creating a community of folks who are spending time on mega-prompts. To join, drop your details here: https://forms.gle/ARCrk4sSBw9RreLf8. Check out some of my previous views on prompt libraries here.

The field of conversational AI is moving fast. Yet short prompts given to AI models like GPT have limits. They often fail to capture what the user intends, the full context of the question, and the thought process that should be used to reach the output. This is where mega-prompts come in. These are longer instructions, typically above 300 words, that spell out the user's intent and context so the model can generate more accurate responses.

This makes sense. For the longest time, technical constraints have meant that we build and work with software designed to restrict the scope of interaction between human and machine. We click 5 buttons to purchase something on Amazon. We use as few words as possible to search - “Restaurants open nearby” in Google Maps. We use product management and UI/UX to create complex products that look and feel simple to use for the average user.

With LLMs, our ability to express our intended outcome in natural language is now unbounded. Structured workflows within software are no longer the only way to generate machine-enabled outcomes. We can now use LLMs to help us interact with the broader world.

Software has always had one key advantage: it has, perhaps inadvertently, helped not just to automate workflows but also to standardize them. The workflows within software tools reflect a structured way of ingesting data and producing outputs, which limits variance in those outputs.

When interacting with LLMs, which do not have the same workflow boundaries, the question is - how can humans ensure that the machine understands the fundamental intent of what is desired? LLMs do not read minds or predict intent - they simply reproduce what is likely to be a desired output based on available data.

When a highly customized intent is not precisely worded by the user, an LLM understandably cannot grasp the user’s intent or the context of the work. As an example, look at the previous example of a CMO mega-prompt.

If we had simply given a general-purpose LLM a one-sentence prompt such as ‘give me a checklist to be a good CMO, and ask me any questions you need’, it is unlikely that it would have developed the implied underlying system needed to provide the type of granular output that the user truly intended.

Mega-prompts therefore provide the structured framework that underlies a high-quality thought process and better frames the user’s intent. As tooling becomes more powerful, the onus lands on the user to express their intent more precisely.

Will Prompt Engineering ever become obsolete?

I think this is very unlikely due to the continued need for a creative expression of intent as the cost of production goes down.

“Continued Improvement of Great”: Firstly, as LLMs raise the floor of what ‘good’ performance looks like, there will always be the competitive need to increase the ceiling of what ‘great’ looks like. New, innovative ways of thinking, expressed in conjunction with sophisticated prompts, may help produce an outcome that is in line with the frontier of ‘greatness’. For example, while LLMs may replace a lot of strategy consulting work, we wouldn’t necessarily say that an LLM is a replacement for Clayton Christensen’s way of thinking. This is because LLMs are great at replicating output, but not at determining what ‘great’ looks like.

“Last-Mile Fine-Tuning”: Secondly, niche use cases will always emerge which require the last mile of contextual fine-tuning. This fine-tuning requires business expertise, logic, and context, which can be expressed through mega-prompts. Fine-tuning isn’t just about understanding a sample output, but also about the thought processes behind a great output. Embedding thought processes and process supervision for fine-tuning purposes will be one of the most important areas of development, as OpenAI noted in May.

“Workflows are documented, thought processes are not”: While many workflows are documented and executed through SaaS, many of the complex thought processes that experts carry in their heads are not documented. SaaS is simply workflow encapsulated by products, while mega-prompts are thought processes encapsulated by words. Think crisis management, private equity, branding strategy, etc. Textbooks may exist for some of these niche industries (if at all), but textbooks and formal workflows tend to be either general or too high level, so the mental models are largely passed down through experience and work. Each stage of the thought process requires a high level of contextual information, which humans are able to actively seek out. A large part of the difficulty of capturing and digitizing thought processes is the sheer volume of unstructured data, on both the input and the output side.

For example, while Clayton Christensen bots popped up about 10 seconds after GPT’s APIs went live, it is clear that Clayton-bot’s outputs were extremely limited in scope, and certainly not used for solving real problems. They are limited in scope because the bot is trying to replicate an output whose methodology it does not understand, and because Clayton’s written methodologies are likely at too high a level to apply. Clayton had been optimizing for humans to understand his work and certainly hadn’t written it in a way that helps LLMs replicate his exact thought process.

“There are different expert philosophies for the same problem”: Thirdly, even in the event of AGI, many people will have different thought processes they want to incorporate into an output. The role of domain experts will likely change, both in the medium term with LLMs and in the event of AGI: the most effective experts will be those who can best communicate their decision processes and incorporate them to work with and through LLMs.

Back to the Clayton analogy: smart, reasonable people will weigh input factors differently, largely for philosophical reasons, even with the same information and problem. Clayton Christensen using his thought process will come up with a different answer than, say, Bill Ackman. Both will look to incorporate and digitize different thought processes for the same question, and will likely reach different conclusions that each is comfortable with.

Chain-of-thought reasoning, as replicated somewhat through mega-prompts, is thus the most likely way we will see sophisticated/expert thought processes captured by LLMs, as humans get accustomed to digitizing thought processes rather than simply workflows. (To be clear - I am not saying chain-of-thought reasoning is the best or most efficient way to fine-tune LLMs, simply that it is probably a key component of one specific task: helping experts digitize and replicate their thought processes at scale.)

So what should a Mega-Prompt look like?

Mega-prompts reflect an opinion and additional context through 3 things:

1. The context in which the LLM should best approach the question, including but not restricted to:

   - The persona the LLM should take
     - “You are a backend technology consultant”

   - The goals of the user
     - “I am looking to optimize my cloud storage system, focusing on reducing cost over speed as I am budget but not time sensitive.”

2. The process by which different elements of the answer should be obtained:

   - The chain-of-thought reasoning that should be employed
     - “First, examine the structure of my systems, being conscious of X”
     - “Second, compare that to the infrastructure design of some of my competitors, particularly Y & Z”

   - The structure of the back-and-forth, including which elements of the answer should be confirmed before proceeding
     - “Generate a number of additional questions that would help more accurately answer the question”
     - “Before proceeding to the cost calculations, please summarize the pros and cons of different systems, and confirm what factors I care more or less about.”

3. The format & structure of the answer:

   - Vocabulary, grammar, etc.
     - “Use short sentences and explain any acronyms as my audience is non-technical”

   - Any constraints and guardrails that should be noted in the answer
     - “Avoid relying on any information prior to 2018 as a new advancement in 2019 has made all developments prior redundant”
     - “Please use this template that I rely on for the format of your answer”
     - “From now on, whenever you generate code that spans more than one file, generate a Python script that can be run to automatically create the specified files or make changes to existing files to insert the generated code.”
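As a rough illustration, the three parts above (context, process, format) could be assembled in code along these lines. This is a minimal sketch in Python, not a standard format: the `MegaPrompt` class, its field names, and the section headings inside `render` are all hypothetical, and the example strings are taken from the list above.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class MegaPrompt:
    """Hypothetical container for the three parts of a mega-prompt."""

    # 1. Context
    persona: str
    goal: str
    # 2. Process
    reasoning_steps: List[str] = field(default_factory=list)
    checkpoints: List[str] = field(default_factory=list)
    # 3. Format
    style: str = ""
    constraints: List[str] = field(default_factory=list)

    def render(self) -> str:
        """Join the non-empty parts into one block of prompt text."""
        sections = [self.persona, self.goal]
        if self.reasoning_steps:
            numbered = [f"{i}. {step}" for i, step in enumerate(self.reasoning_steps, 1)]
            sections.append("Work through these steps in order:\n" + "\n".join(numbered))
        if self.checkpoints:
            bullets = [f"- {item}" for item in self.checkpoints]
            sections.append("Confirm with me before proceeding:\n" + "\n".join(bullets))
        if self.style:
            sections.append(self.style)
        if self.constraints:
            bullets = [f"- {item}" for item in self.constraints]
            sections.append("Constraints:\n" + "\n".join(bullets))
        return "\n\n".join(sections)


prompt = MegaPrompt(
    persona="You are a backend technology consultant.",
    goal=(
        "I am looking to optimize my cloud storage system, focusing on "
        "reducing cost over speed as I am budget but not time sensitive."
    ),
    reasoning_steps=[
        "Examine the structure of my systems.",
        "Compare that to the infrastructure design of some of my competitors.",
    ],
    checkpoints=[
        "Summarize the pros and cons of different systems before the cost calculations.",
    ],
    style="Use short sentences and explain any acronyms as my audience is non-technical.",
    constraints=["Avoid relying on any information prior to 2018."],
)

text = prompt.render()
print(text)
print(len(text.split()), "words")
```

Keeping each part in its own field makes it easy to swap one element (say, the persona) while reusing the rest. A real mega-prompt would usually carry far more context than this example, given the 300-word guideline mentioned earlier.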

Do mega-prompts meaningfully change output?

(Source: https://www.makerbox.club/marketing-mega-prompts)

As we can see, mega-prompts dramatically increase the quality of the output, as judged by the user, particularly for qualitative and creative tasks.

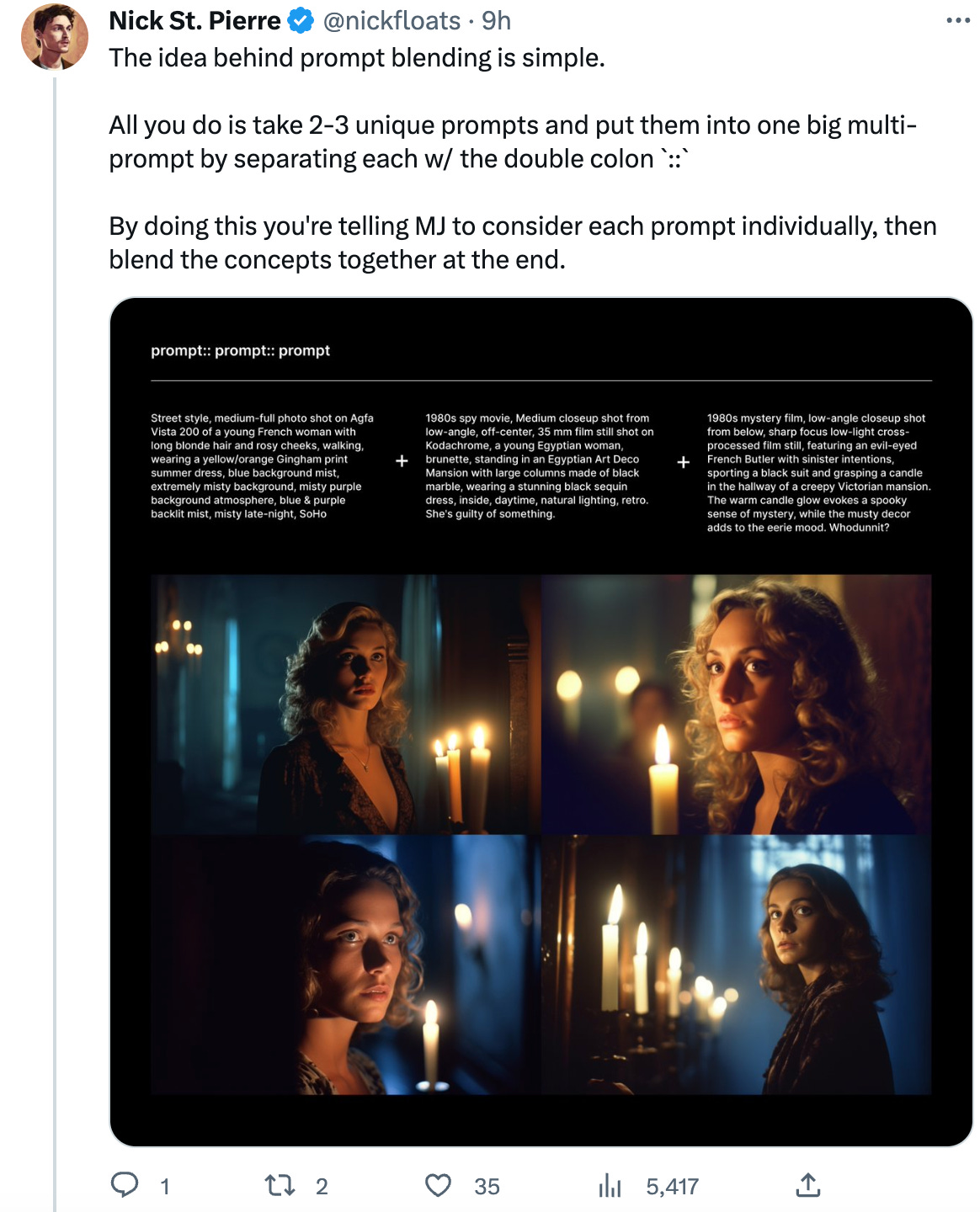

Although many of the examples cited above involve text creation, mega-prompts are also extremely relevant for image generation.

The above describes a workflow encapsulated into a series of prompts.

Over time, we are likely to see prompts evolve from one-sentence prompts, to mega-prompts, to chained prompts, as prompts become the atomic unit of work.
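One way to picture chained prompts is as a list of prompt templates where each reply feeds the next prompt. The sketch below is only an illustration under stated assumptions: `run_chain`, the `{previous}` placeholder, and `echo_llm` are hypothetical names, and `echo_llm` is a stand-in for a real model call so that the example runs offline.

```python
from typing import Callable, List

# Assumption: any function that takes prompt text and returns reply text
# can play the role of the model here.
LLM = Callable[[str], str]


def run_chain(templates: List[str], llm: LLM, initial_input: str) -> List[str]:
    """Run prompts in order, filling `{previous}` with the prior reply."""
    outputs: List[str] = []
    previous = initial_input
    for template in templates:
        prompt = template.replace("{previous}", previous)
        previous = llm(prompt)
        outputs.append(previous)
    return outputs


def echo_llm(prompt: str) -> str:
    """Stand-in model: reports the prompt's word count instead of calling an API."""
    return f"[reply to a {len(prompt.split())}-word prompt]"


chain = [
    "List the main cost drivers in this storage setup: {previous}",
    "Rank these cost drivers by savings potential: {previous}",
    "Draft a migration plan for the top-ranked item: {previous}",
]

for step, reply in enumerate(run_chain(chain, echo_llm, "three regions, hot storage only"), 1):
    print(step, reply)
```

In this framing each template is one unit of work, and a mega-prompt could sit in any slot of the chain.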

I have seen folks from all types of industries and backgrounds working on mega-prompts and am creating a community of folks (you don’t have to be technical) who are spending time on mega-prompts. To join, drop your details here: https://forms.gle/ARCrk4sSBw9RreLf8

As a long time coder, I am beginning to feel like Paul Bunyan.

Thanks for such a comprehensive explanation. Reminds me that while ChatGPT can be a great intern, great intern outputs are only as good as their inputs. (Also, Christensen is the correct spelling of Clay’s last name - I work for the non-profit he co-founded, ChristensenInstitute.org.)